Boxing gRSShopper

What, again? Yes, again. Today I'm working on creating and saving a reusable Docker image of gRSShopper. I have the benefit of some previous work on this set-up, so it might actually work today, and I'm documenting my process.

Note: I'm doing all this so you don't have to. You should be able to run gRSShopper in just a few seconds.

All you need to do to run it is to install Docker and then enter the following command:

docker run --publish 80:80 --detach --name gr1 grsshopper

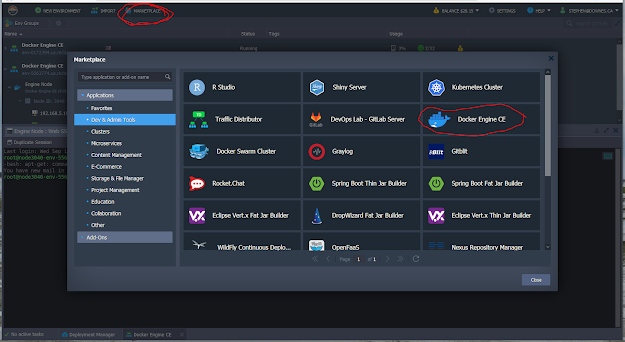

If you can't install Docker, just create an account on Reclaim Cloud and select Docker CE from the marketplace (see below), then run the docker run command above.

(Note: I say 'today' in the first paragraph, but this article is actually the result of a good month's work. So enjoy.)

Step 1 - Reclaim Cloud

I'm using Reclaim Cloud (https://reclaim.cloud/) because I think it will be cheaper and easier. I experimented with it all afternoon last week and it cost me five cents. I've set up an account with them, and seeded it with $25 using a credit card.

Logging in: https://app.my.reclaim.cloud/ (saved it to my 'start' page because there's no direct link from the cloud home page).

Step 2 - Create a Docker Image

I initially followed Tony Hirst here for the first couple of steps https://blog.ouseful.info/2020/06/09/first-forays-into-the-reclaim-cloud-beta-running-a-personal-jupyter-notebook-server/ but I learned through trial and error that this method won't work. He selected 'Create a new environment', which would work for many things, but wouldn't allow me to build a good base Docker image.

I started with the Marketplace...

Next, I needed an image to start with. It's much easier to start with someone else's work. This was not easy. I did a lot of searching for suitable images. I tried every Perl image I could find, but none of them would start up with a full Apache and MySQL environment out of the box. I would have to build my own (this, by the way, has stymied me for years).

After much more searching and testing, I decided on the Fauria LAMP image. It checked all my boxes:

- It was relatively recent

- When you started it, Apache and MySQL would start up on their own

- There was a Dockerfile and GitHub repository so I could clone it and work from source

- It had good documentation telling me how to run it

You see, what I really needed was two things. First, I needed a Docker image running gRSShopper that anyone could download and run with a couple of simple commands. But also, I needed a way to build these from scratch, so I can do things like use newer versions of Linux, add additional applications, and update the gRSShopper scripts. Fauria fit the bill.

To install and run Fauria in the Docker Engine, I type two commands:

docker pull fauria/lamp

docker run --publish 80:80 --detach --name f1 fauria/lamp

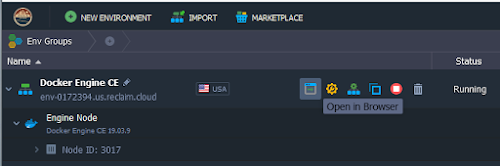

The first command retrieves a fully-functioning image called 'fauria/lamp' from the Docker Hub on the web. The second command runs the image. It makes port 80 available to the external world, it 'detaches' so it runs in the background, and it names the container f1. Because it's now accessible to the external world, I can use the 'Run in Browser' button and open it in my web browser.

Except, if you're me, you get this when you click on the button:

I tore out my hair trying to figure this out. It took days. Finally, I realized: when you click on that button for the first time, it tries to open:

https://env-8552976.us.reclaim.cloud/

But I'm using port 80, which is just the regular (non-secure) web. So I needed to try:

http://env-8552976.us.reclaim.cloud/

And that worked! (And the button continued to work after that, so this seems to be a one-time thing). (Note that your env number will be different from my env number). And then you get the Apache startup page...

And if you type http://env-8552976.us.reclaim.cloud/index.php you get the full configuration:

That told me everything was up and running and that I had a proper web server on my hands. Now, how to work with it? I need to be able to get inside the container to do stuff.
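
The https/http mix-up can also be checked from a command line rather than the browser. Here's a minimal sketch; the to_http helper is a made-up name for illustration, and the curl line assumes curl is installed and that your environment URL is reachable.

```shell
# Hypothetical helper (not part of Docker or Reclaim): rewrite an https://
# URL to plain http://, since the container only publishes port 80.
to_http() {
    printf '%s\n' "$1" | sed 's|^https://|http://|'
}

# Example, left commented out because it needs network access:
#   curl -sI "$(to_http https://env-8552976.us.reclaim.cloud/)" | head -n 1
```

If the first line of the response mentions a 200 status, Apache is answering on port 80.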

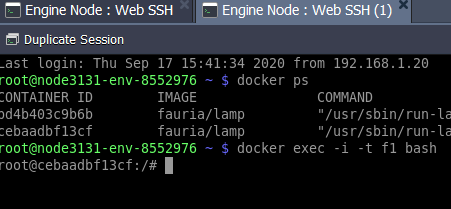

There are various approaches, but the easiest was the suggestion right on the Fauria website (I did spend time on the alternatives). At the prompt, type the command:

docker exec -i -t f1 bash

This tells Docker to execute the 'bash' command inside the container named f1. 'bash' is the name of the Linux shell environment. So you are opening a terminal session in the running container itself. You can tell because the prompt changes. It's now a # and the ID of the container is in front of it:

(In the image above I used the command docker ps to list my running containers - as I test things for this article I actually have two containers running, each with a different ID).

Now that I'm in the container itself, I can work directly with the environment. For example, I could type the command mysql -u root to work with the database. Or I could use the command cd /var/www/html to see or edit the website index.html and index.php files I'm viewing through the browser.
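
You don't always need an interactive shell for this. As a rough sketch, docker exec can also run a single command and drop you back at the host prompt. The in_container wrapper below is a hypothetical convenience function, and it assumes the container is named f1 as above.

```shell
# Assumption: the container is named f1, as in the run command above.
CONTAINER="${CONTAINER:-f1}"

# Hypothetical wrapper: run one command inside the container, then return.
in_container() {
    docker exec "$CONTAINER" "$@"
}

# Examples (these need a running container, so they are commented out):
#   in_container ls -l /var/www/html
#   in_container mysql -u root -e 'SHOW DATABASES;'
```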

Step 3 - Enable Perl

The next step is to make Perl run in this environment. This took a lot of digging around through various places on the web. The first step was to make sure Perl was actually available inside the container. So I tested with the command

perl -v

And yes, it's available.

How to test?

Well, I know now that it won't work, but here's what I did. First, I needed a CGI directory for the Perl scripts (the fact that there wasn't one was a Big Clue that it wouldn't work). So I made one:

mkdir /var/www/html/cgi-bin

Then I needed a script to test. The text editor vi doesn't work very well in the container shell environment, but there was an easier way to just write the script directly to a file using the 'tee' command:

tee -a testperl.cgi <<EOF
#!/usr/bin/perl
print "Content-type:text/html\n\n";
print "Hello<p>\n\n";
EOF

(I found this tee command on Stack Overflow.) (Hint - just copy from here and paste (using control/v) right into the shell, then press enter).

Then test the script with:

perl testperl.cgi

And it will output the header and text. Next, move it into the cgi-bin directory and give it permission to execute:

cp testperl.cgi /var/www/html/cgi-bin/testperl.cgi

chmod 705 /var/www/html/cgi-bin/testperl.cgi

and test it with the browser (in this case, http://env-8552976.us.reclaim.cloud/cgi-bin/testperl.cgi ) and it will fail. Why? Because the Apache web server is not set up to run Perl.
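
The script-creation steps above can be collected into one sketch. This version is an assumption-laden variant, not what I typed at the time: it writes to a scratch directory (the cgi-sketch name is made up), uses cat with > so that re-running it overwrites the file instead of appending to it, and quotes 'EOF' so the shell passes the Perl lines through untouched. The #! line has to be the very first line of the file.

```shell
# Sketch only: write the test script to a scratch directory rather than
# straight into /var/www/html/cgi-bin (the directory name is an assumption).
workdir="${TMPDIR:-/tmp}/cgi-sketch"
mkdir -p "$workdir"

# 'EOF' is quoted, so nothing inside the heredoc is expanded by the shell.
cat > "$workdir/testperl.cgi" <<'EOF'
#!/usr/bin/perl
print "Content-type:text/html\n\n";
print "Hello<p>\n\n";
EOF

# 705: owner may read/write/execute, group gets nothing, others read/execute.
chmod 705 "$workdir/testperl.cgi"
```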

This took quite a bit of searching to figure out, because the default installation of Apache generally just runs Perl. But this post from Server World did the trick (and again, it was important to find something relatively recent; I wish I could just set Google to default to 'past year').

First, you have to enable the CGI module in Apache (this is what lets it run Perl scripts). You do this by running a command, as follows:

a2enmod cgid

(Remember, you are running this inside the container (the # prompt)). Then it says you need to restart the Apache server:

systemctl restart apache2

Don't do this. First of all, the systemctl command isn't even recognized. But even more importantly, if you restart Apache, the Docker container will crash (I can't count how many times this happened before I figured out what was happening). You have to reload the Apache server. Once I realized the container was crashing, I knew what to search for, and found this bit of help on Stack Overflow.

Here's the command to reload Apache:

/etc/init.d/apache2 reload

This worked just fine.

Now the Server World article suggests that this is all you need to do if you put all your scripts in /usr/lib/cgi-bin. I actually went a fair way down this path before deciding I wanted a more standard configuration. But you need to tell Apache to find and run CGI files in the cgi-bin directory.

This is a two-step process. First you need to create a configuration file, then you need to enable the configuration file in Apache (this was a change for me, since I'm used to Apache configuration all being in one big file, but I discovered I really had to do it this way).

Here's the text of the configuration file.

# create new

# processes .cgi and .pl as CGI scripts

<Directory "/var/www/html/cgi-bin">

Options +ExecCGI

AddHandler cgi-script .cgi .pl

</Directory>

Store this in /etc/apache2/conf-available/cgi-enabled.conf (you can use vi, or you can use the same method I used above to create testperl.cgi ).

Here's the command to enable the configuration file in Apache:

a2enconf cgi-enabled

Then the website says we're supposed to restart Apache, but we've learned, and we just reload Apache.

Now try the URL and it works!

I now have Perl running in Apache in a web container.
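
For reference, the whole of Step 3 can be sketched as one short script. The write_cgi_conf function is a hypothetical helper that takes the target directory as its argument, so it can be tried somewhere harmless first; the commented lines show the order the commands would run in inside the container.

```shell
# Hypothetical helper: write the CGI configuration into the directory
# given as $1. Inside the container that directory would be
# /etc/apache2/conf-available.
write_cgi_conf() {
    cat > "$1/cgi-enabled.conf" <<'EOF'
# processes .cgi and .pl as CGI scripts
<Directory "/var/www/html/cgi-bin">
    Options +ExecCGI
    AddHandler cgi-script .cgi .pl
</Directory>
EOF
}

# Inside the container the full sequence would then be:
#   a2enmod cgid
#   mkdir -p /var/www/html/cgi-bin
#   write_cgi_conf /etc/apache2/conf-available
#   a2enconf cgi-enabled
#   /etc/init.d/apache2 reload     # reload, not restart
```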

Step 4 - Run from GitHub

It's nice that I have a running image (and you can be sure I did not shut this image down until I was sure I could recreate it) but as I mentioned above, I'd rather be able to just create an image using scripts in GitHub. That's the proper way to build Docker images, and it allows me to customize it as I need to.

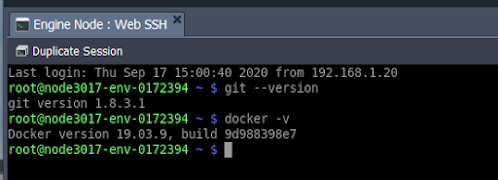

The first step was to make sure I could run the Fauria image from GitHub. There weren't instructions on the Fauria web site (why would there be?) but working with the Docker documentation I was able to manage.

First, I opened a fresh clean 'Docker Engine CE' just as I did at the beginning of Step 2, above. Only this time I did not run a docker pull command. I did something else entirely.

4a - clone the repository

I opened the Web SSH shell and used Git to clone a copy of the Fauria repository in the environment:

git clone https://github.com/fauria/docker-lamp

This created a directory called 'docker-lamp' and put all the files in it. I entered the directory.

cd docker-lamp

You can see all the files in the directory by typing ls -l and looking at the listing. The most important file is the Dockerfile, but everything is needed in some way or another.

4b - build the container

The next step is to build the container. Docker will follow the instructions in the Dockerfile, downloading and loading all the applications you need and setting up the container configuration. Here's the command:

docker build --tag bb1 .

The tag can be whatever you want it to be. I keep these brief. The period tells Docker to use the current directory (where the Dockerfile is located).

This step will take a while the first time as all of the files are downloaded. The next time you run the command it will be a lot faster because it will use the files it has already downloaded.

4c - run the container

Now we're right where we were after the docker pull command - we're ready to run the container.

docker run --publish 80:80 --detach --name bb bb1

The container will start up just as it did before, and we can set it up for Perl just as we did before (and yes, I spent a day testing this, because I've learned not to take anything for granted in the world of cloud containers).
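
Since I ended up repeating 4b and 4c many times while testing, here's a rough sketch of the two steps as one function. The build_and_run name is made up, and it assumes you are already inside the cloned docker-lamp directory. It also removes any old container with the same name first, which is my own addition and not something the steps above require.

```shell
# Hypothetical helper. $1 is the image tag, $2 is the container name
# (bb1 and bb in this post). Run it from the directory with the Dockerfile.
build_and_run() {
    tag="$1"
    name="$2"
    docker build --tag "$tag" . || return 1
    # Remove any old container with this name; ignore "no such container".
    docker rm --force "$name" >/dev/null 2>&1
    docker run --publish 80:80 --detach --name "$name" "$tag"
}

# Usage: build_and_run bb1 bb
```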

Step 5 - Create the Dockerfile

Now what I want to do is customize the Dockerfile so I don't have to type in a bunch of commands and create a bunch of files every time I start up my container. I'll begin with the Fauria Dockerfile and make my changes.

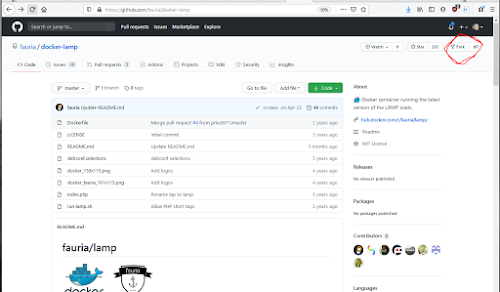

The best way to do this is to create my own GitHub repository based on the Fauria repository. First, go to https://github.com and create an account (I already had one, so I used that). Next, go to the Fauria repository and click on the 'Fork' button in the upper right hand corner. This creates an exact copy of the Fauria repository in your own account.

Here's my forked version: https://github.com/Downes/docker-lamp You will be able to see the changes I made.

There are two major sets of changes (and this actually went pretty quickly). First, I needed to tell the Dockerfile to execute the commands needed to enable Perl for Apache. This included writing the configuration file. It's not best practice, but I did all my editing right in the GitHub web page. First, I created a file called cgi-enabled.conf (click on 'Add File', right beside the green button, and type or paste your code):

(I actually copied this from the existing serve-cgi-bin.conf file, which is why there was some extra content in there).

Next, I edited the Dockerfile and inserted several commands that would store the .conf file and enable CGI. Here's what I added:

RUN a2enmod cgid

RUN rm -f /etc/apache2/conf-available/serve-cgi-bin.conf

COPY cgi-enabled.conf /etc/apache2/conf-available/

RUN mkdir /var/www/html/cgi-bin

RUN a2enconf cgi-enabled

The RUN commands tell Docker to run a command inside the container. These are all the commands I had tried earlier to enable Perl. It also tells Docker to copy the .conf file to the right directory in the container. I also removed the existing serve-cgi-bin.conf file that tells Apache to use /usr/lib/cgi-bin for Perl scripts.

I also wanted to load a CGI test file. I didn't want to use the simple 'hello' script I used before; I have a longer script I use to test servers called server_test.cgi, so I created this file in GitHub (the same way I created cgi-enabled.conf). Here it is: https://github.com/Downes/docker-lamp/blob/master/server_test.cgi

Then I had to tell Docker to copy it to the container. Here are the commands in Dockerfile that do that:

COPY index.php /var/www/html/

COPY server_test.cgi /var/www/html/cgi-bin

RUN chmod 705 /var/www/html/cgi-bin/server_test.cgi

COPY run-lamp.sh /usr/sbin/

I use the COPY command to copy the script into the container, and then tell the container to RUN chmod 705 to give the .cgi script permission to execute. Notice the existing commands to copy index.php and run-lamp.sh; these were in the Fauria Dockerfile. The first is the index.php page we ran way back above, and the second is a shell script that runs once the container is running; it starts up all the servers and gets the container running.

I also wanted to install a number of Perl modules. The base image from Ubuntu contains some but by no means all. I used server_test.cgi to see what modules I still needed to install. Then I added a command in the Dockerfile to run the apt-get command in the container to install all these modules, as follows:

RUN apt-get install -y \

libcgi-session-perl \

libwww-perl \

libmime-types-perl \

libjson-perl \

libjson-xs-perl \

libtypes-datetime-perl \

libmime-lite-tt-html-perl

The -y option tells the system to enter 'yes' at any prompt, so the installation doesn't halt. The \ continues the command on the next line.
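
I used server_test.cgi to find the gaps, but a quicker check from the container shell is also possible. This is only a sketch: missing_perl_modules is a made-up function name, and the module names in the usage line are the Perl names I'd expect the first few packages above to provide.

```shell
# Hypothetical helper: print each Perl module from the argument list
# that fails to load.
missing_perl_modules() {
    for module in "$@"; do
        # -M loads the module; -e 1 runs a do-nothing program.
        perl -M"$module" -e 1 >/dev/null 2>&1 || printf '%s\n' "$module"
    done
}

# Usage (inside the container):
#   missing_perl_modules CGI::Session LWP::UserAgent MIME::Types JSON JSON::XS
```

Anything it prints still needs a package; no output means every module in the list loaded.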

Finally, I wanted to test whether Perl could connect to the database. There's a section in server_test.cgi that does that. But it needs a database to run the test on. So I create a simple SQL file to define a database, and some commands in Dockerfile to set it up. I created grsshopper.sql the same way I created the other files and posted some SQL into it to create a single table.

Here are the lines I added to the Dockerfile:

COPY grsshopper.sql /var/www/html/cgi-bin/grsshopper.sql

RUN /bin/bash -c "/usr/bin/mysqld_safe &" && \

sleep 5 && \

mysql -u root -e "CREATE DATABASE grsshopper" && \

mysql -u root -e "grant all privileges on grsshopper.* TO 'grsshopper_user'@'localhost' identified by 'user_password'" && \

mysql -u root grsshopper < /var/www/html/cgi-bin/grsshopper.sql

As you can see, I copy the SQL file into the container, then run some mysql commands to create the database, create a user to access the database, and then run the SQL from the file in the database. Meanwhile, in server_test.cgi I made sure the database name, database user and database password were the same as I had defined in the Dockerfile (yes, I looked up to see whether there was a way in Perl to change this default configuration later, so you're not publishing your database credentials for the world to see).
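
One fragile spot in those lines is the fixed sleep 5: if MySQL takes longer than five seconds to start, the commands after it fail. A possible alternative, which is not what my Dockerfile does, is to poll with mysqladmin ping until the server answers. The wait_for_mysql function below is a hypothetical sketch; to use it in a build it would have to live in a small script that the RUN step calls.

```shell
# Hypothetical helper: poll until the MySQL server answers,
# trying up to $1 times (default 30), one second apart.
wait_for_mysql() {
    attempts="${1:-30}"
    while [ "$attempts" -gt 0 ]; do
        if mysqladmin -u root ping >/dev/null 2>&1; then
            return 0
        fi
        attempts=$((attempts - 1))
        sleep 1
    done
    echo "MySQL did not come up in time" >&2
    return 1
}

# Usage, in place of "sleep 5":
#   wait_for_mysql 30 && mysql -u root -e "CREATE DATABASE grsshopper"
```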

Step 6 - Push to Docker

This was a ton of work. I ran all of this stuff manually first, then put it in the Dockerfile and ran it again, all to make sure it worked. And I never want to have to do this again. Sure, I have my scripts in GitHub. But I wanted to make the container available to whoever wants to use it (this is the plan for gRSShopper as a whole).

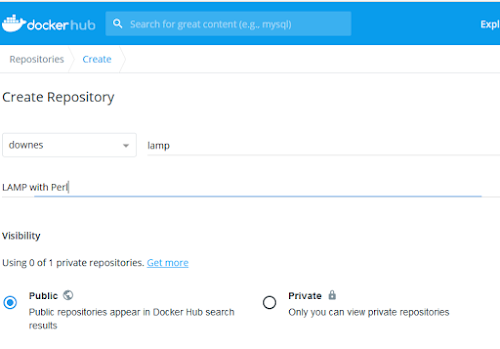

The instructions are here. First, I have to create an account on Docker, and then login from my Docker CE environment. I already have an account on docker.com so I used that. Next, still on the Docker website, I have to create the repository for my image. Click on the big 'Create' button on your Docker page:

Now back to the Docker CE environment running on Reclaim. To login, I enter the command:

docker login

and enter my username and password when prompted. Now to push my container.

docker tag bb1 downes/lamp

docker push downes/lamp

The tag bb1 is the tag I created for the image back when I built it. The second tag is the name of the repository I just defined on Docker (again, I had to try several versions of this, because the instructions on the Docker website are misleading).

Now I or anyone else can run my LAMP container with Perl and a database just by running a simple Docker pull command. Here it is:

docker pull downes/docker-lamp

(I ended up calling my new container docker-lamp instead of simply lamp).
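
Because the repository name has to match in the tag, push and pull commands, it's easy for them to drift apart (as they did above while I was renaming things). Here's a small sketch that takes the name once and prints the matching pull command. The publish_image function is hypothetical, and it assumes you have already run docker login.

```shell
# Hypothetical helper. $1 is the local image tag, $2 is the Docker Hub
# repository name. Prints the pull command that matches what was pushed.
publish_image() {
    local_tag="$1"
    repo="$2"
    docker tag "$local_tag" "$repo" || return 1
    docker push "$repo" || return 1
    echo "docker pull $repo"
}

# Usage (after docker login): publish_image bb1 downes/docker-lamp
```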

Step 7 - Box gRSShopper

Now I am finally ready to move gRSShopper into a container. What I did was to fork my existing docker-lamp repository into a brand new gRSShopper repository (well, no, what I actually did was to use my existing gRSShopper repository, moving each file by hand, testing step-by-step every single file, because I've learned not to take anything for granted).

What I needed to do to box gRSShopper was the following:

- use an existing gRSShopper database

- use the existing gRSShopper Perl scripts

- fix them so they run properly in a container

Not so hard. gRSShopper has a built-in database backup function, so I added a backup to the GitHub repository in place of the previous grsshopper.sql (by deleting the existing one, and then uploading the file from my desktop).

I used a bit of a hack to get the scripts and other files into the container (I couldn't find an easy way to copy an entire directory into a container). I went into the container (using docker exec -i -t f1 bash, recall) and cloned the gRSShopper GitHub repository inside the container, then copied everything I needed, and gave the .cgi scripts permission to run:

git clone https://github.com/Downes/gRSShopper

cd gRSShopper

cp -R html/* /var/www/html/

cp -R cgi-bin/* /var/www/html/cgi-bin

chmod 705 /var/www/html/cgi-bin/*.cgi

The -R forces the copy command to descend into subdirectories and copy them as well.

I haven't incorporated this into the Dockerfile yet, but I imagine some version of this will work.
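
Another route worth trying is the docker cp command, which copies files or whole directories from the host into a running container. The sketch below is untested against my own setup and rests on assumptions: it runs on the host (not inside the container), from inside a clone of the gRSShopper repository with html/ and cgi-bin/ directories, and the copy_site_into name is made up.

```shell
# Hypothetical helper. $1 is the container name (f1 in this post).
copy_site_into() {
    container="$1"
    # "dir/." copies the directory's contents, not the directory itself.
    docker cp html/. "$container":/var/www/html/ || return 1
    docker cp cgi-bin/. "$container":/var/www/html/cgi-bin/ || return 1
    # Let the shell inside the container expand the *.cgi wildcard.
    docker exec "$container" sh -c 'chmod 705 /var/www/html/cgi-bin/*.cgi'
}

# Usage: copy_site_into f1
```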

To fix the scripts, I had to rewrite my login scripts to use sessions properly. I then added a bit at the end of server_test.cgi that would allow the user to create a new Admin account. Since not all of the Perl modules are yet loading, I had to edit the scripts to disable some functions.

Finally, I had to edit ple.htm to remove all references to https (because the container is running a non-secure version of Apache). I also had to edit the Javascript to allow for generic domain names (because every container has its own unique domain name).

With all of that I have a running gRSShopper server in a box. I tagged it and pushed it to Docker, and you can see it here: https://hub.docker.com/repository/docker/downes/grsshopper-ple

All you need to do to run it is to install Docker and then enter the following command:

docker run --publish 80:80 --detach --name gr1 grsshopper

Or you can build it yourself. But now, a short digression...

- git clone https://github.com/pclinger/docker-apache-perl.git

- cd docker-apache-perl

- docker build -t docker-apache-perl .

- docker run -v c:/www:/var/www/html -p 80:80 -d docker-apache-perl /usr/sbin/apache2ctl -D FOREGROUND

- Access http://localhost

Now, these would be what I would do if I were running Docker on my desktop instead of Reclaim. I have Docker installed; why am I not using my desktop, then? Because it's an NRC machine, and computing services has locked down all file-sharing, so the -v (volume) option will not work.

Reclaim does all of this for me. I open a Reclaim cloud environment, and then the container can share files with the cloud environment. So once the container is running, I can start using it right away. So let's give it an index page and test it.

Long story short, it didn't work. Why not? Look at the run command:

docker run -v c:/www:/var/www/html -p 80:80 -d docker-apache-perl /usr/sbin/apache2ctl -D FOREGROUND

The -v option handles file sharing. The format is -v local:container. Now look at the local part: c:/www. That's a Windows file location. But the Reclaim environment is Linux, so that has to be fixed. In the container I eventually used, all the file sharing is Linux to Linux, so it worked out of the box.

End of digression... but if you're running it yourself, make sure your volumes are using the right kind of directory names (check inside the Dockerfile as well).
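
A quick way to catch this before running anything is to check the host side of the -v option. This is a minimal sketch with a made-up function name; it only looks at the shape of the path and knows nothing about Docker itself.

```shell
# Hypothetical helper: succeed if the argument looks like a Windows
# drive path such as c:/www or C:\www.
is_windows_path() {
    case "$1" in
        [A-Za-z]:[/\\]*) return 0 ;;
        *) return 1 ;;
    esac
}

# On a Linux host the run command would use a Linux path instead, e.g.:
#   docker run -v "$PWD/www":/var/www/html -p 80:80 -d docker-apache-perl \
#       /usr/sbin/apache2ctl -D FOREGROUND
```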

Run from the GitHub repository as follows:

git clone https://github.com/Downes/grsshopper

(or git pull origin master if reloading the changed repo)

cd grsshopper

docker build --tag grsshopper .

docker run --publish 80:80 --detach --name gr1 grsshopper

Testing the server if you're running it from your desktop (if you're on Reclaim, just use the button):

http://localhost (should show gRSShopper start page)

http://localhost/cgi-bin/server_test.cgi (should show Perl test page)
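
The same two checks can be run from a terminal. A rough sketch, assuming curl is installed; http_status and check_server are hypothetical names.

```shell
# Print the HTTP status code for a URL (curl prints 000 if it can't connect).
http_status() {
    curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Check the start page and the Perl test page under a base URL.
check_server() {
    base="$1"
    for path in / /cgi-bin/server_test.cgi; do
        echo "$(http_status "$base$path") $base$path"
    done
}

# Usage: check_server http://localhost
```

A 200 on both lines means Apache and Perl CGI are both answering; a 500 on the second line usually points at the CGI set-up.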

If Perl CGI isn't running properly, try:

docker exec -it gr1 /etc/init.d/apache2 reload

(you can't docker exec -it gr1 apache2ctl restart because it crashes the entire container - see https://stackoverflow.com/questions/37523338/how-to-restart-apache2-without-terminating-docker-container )

(if you crash it, docker start gr1)
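
If you're not sure whether the container survived, docker inspect can report its state. Here's a small sketch that starts the container only if it isn't running; ensure_running is a made-up name, and the container name is whatever you passed to --name.

```shell
# Hypothetical helper. $1 is the container name.
ensure_running() {
    name="$1"
    state=$(docker inspect --format '{{.State.Running}}' "$name" 2>/dev/null)
    if [ "$state" = "true" ]; then
        echo "$name is running"
    else
        docker start "$name"
    fi
}

# Usage: ensure_running gr1
```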

Open a shell inside:

docker exec -i -t gr1 bash
