Working Memory and Object Permanence

This is pretty speculative, but it makes sense to me and I'd like to get the idea down on (digital) paper in case I don't get the chance to come back to it.

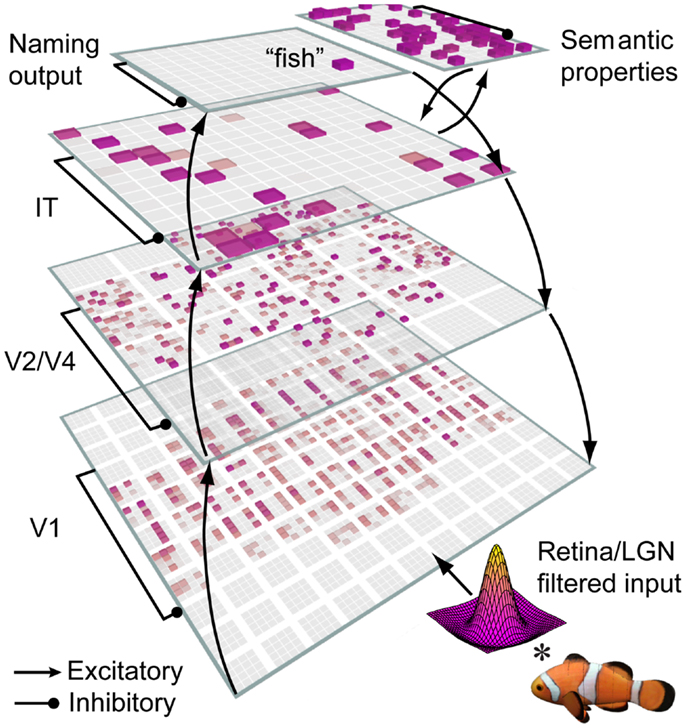

It is well known that we have different types of memory: working memory, short-term memory, and long-term memory. Sometimes the first two are thought of as the same; I've also seen writers distinguish between the two. It won't matter for the purposes of this particular item.

According to Wikipedia, working memory is "a cognitive system with a limited capacity that is responsible for temporarily holding information available for processing." The purpose of working memory is to support "reasoning and the guidance of decision-making and behavior."

This theory is based on an information-processing view of cognition and memory. On this picture, working memory is like a 'buffer' where operations can be conducted and where data can be temporarily stored until we decide whether it is relevant.

The theory of short-term memory is core to cognitive load theory. Here's a summary I read today (consistent with previous accounts I've seen, and which motivated the current post):

Long-term memory acts as an information repository or archive. This archive is organized by structures called schema, which are essentially series of interconnected mental models (Sweller, 2005). However, neither of these structures process new information. New information is processed through the working memory, which can only handle a finite amount of information at once...We're familiar with this concept of cognitive load. It's based on George A. Miller's paper on the "magical number" of 7 (plus or minus two). "The unaided observer is severely limited in terms of the amount of information he can receive, process, and remember."

I've never really been satisfied with that account. It doesn't seem like a very efficient mechanism. It seems to rely far too much on what would need to be a pre-programmed cognitive structure. And my own working memory doesn't seem subject to the same sort of limitations; for example, I commonly keep 9-digit ID numbers or 10-digit phone numbers within easy short-term access. I can remember much longer sequences of letters. Am I some sort of genius?

Well, no. What I'm good at is chunking. Miller explains,

We must recognize the importance of grouping or organizing the input sequence into units or chunks. Since the memory span is a fixed number of chunks, we can increase the number of bits of information that it contains simply by building larger and larger chunks.

That sounds fine, but it implies that the mechanisms underlying working memory are a lot more complex than the buffer analogy suggests. The buffer is for raw unprocessed input, but our working memory seems to be working with things that are already pre-processed. So there's something wrong with the buffer model.
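Miller's arithmetic of chunks, quoted above, can be illustrated with a minimal Python sketch. It assumes, purely for illustration, a fixed span of seven chunks (Miller's "magical number") and treats a chunk as a group of consecutive digits; the function names and the phone number are hypothetical, not taken from Miller's paper.

```python
# Illustrative only: a memory span measured in chunks, not in raw digits.
SPAN_IN_CHUNKS = 7  # assumed capacity, after Miller's "seven, plus or minus two"


def chunk(digits: str, sizes: list[int]) -> list[str]:
    """Split a digit string into consecutive groups of the given sizes."""
    if sum(sizes) != len(digits):
        raise ValueError("chunk sizes must add up to the number of digits")
    groups, start = [], 0
    for size in sizes:
        groups.append(digits[start:start + size])
        start += size
    return groups


def fits_in_span(chunks: list[str], span: int = SPAN_IN_CHUNKS) -> bool:
    """A sequence fits if the number of chunks (not digits) is within the span."""
    return len(chunks) <= span


phone = "6135551234"                    # a hypothetical 10-digit number
as_digits = chunk(phone, [1] * 10)      # ten one-digit chunks
as_groups = chunk(phone, [3, 3, 4])     # three chunks, phone-book style

print(len(as_digits), fits_in_span(as_digits))  # 10 False
print(len(as_groups), fits_in_span(as_groups))  # 3 True
```

The same ten digits cost either ten units or three units of the span depending only on how they are grouped, which is why a 10-digit phone number need not contradict Miller; but the grouping has to happen before the digits are held, which is the pre-processing the buffer model leaves out.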

There's another problem. Earlier this year I saw a number of references to work showing that working memory doesn't act as a buffer at all. As the title of one of those items says, long-term memories aren't just short-term memories transferred into storage. Or, as one summary puts it:

Traditional theories of consolidation may not be accurate, because memories are formed rapidly and simultaneously in the prefrontal cortex and the hippocampus on the day of training. “They’re formed in parallel but then they go different ways from there. The prefrontal cortex becomes stronger and the hippocampus becomes weaker,” Morrissey says.

Now the effect of both of these things (the pre-processing of working memory, and the parallel creation of working memory and long-term memory) suggests that the application of cognitive-load theory in education is mistaken. The claim is that the limitations of working memory mean that "poorly designed instructional sequences can... cause learners to exert more mental effort to learn the material than should have been required." But if working memory doesn't play a key bottleneck role in learning and memory, the impact of poorly designed educational material seems to be lessened.

That has been my thinking for a while now. But I am left wondering why we have working memory at all. We do have this parallel and very limited type of memory. Why?

Here is where I begin speculating in earnest.

Visual processing is parallel and (mostly) non-linear. We receive inputs through the eyes; these are pre-processed through a few neural layers in the retina and then sent as a lattice to the visual cortex. Through several more layers of neural network processing, the undifferentiated input is recognized as patterns, clusters and objects. This diagram from a paper on object recognition is a representation of that process:

What's worth noting is that the bottom layer of this network, where it says 'Retina/LGN filtered input', is essentially a plot of physical space, where the x and y axes correspond to length and width (technically it's a 'two and a half dimension' sketch that also encodes some other visual properties, but the main point here is that it is physical space).

But what about persistence across time? All of our senses operate synchronously. That is, we perceive events when they happen, not before or after they happen. What we see is what is happening in front of us now. What we hear, smell, touch... same thing. So how do we distinguish between something that is fleeting and ephemeral and something that is (more or less) object-permanent?

Enter working memory. It works in exactly the same way as visual perception, except that the inputs are different. The x and y axes no longer correspond to physical dimensions. Instead, one of these axes is time, while the other is objects (where we can think of 'objects' as roughly equivalent to the (mis-named) 'semantic properties' that are the outcome of visual processing).

How do we get an input dimension that corresponds to time? I can think of several mechanisms - we might loop through the y-dimension of the input layer in sequence, so each degree would represent a different point in time. Or the values of the input neurons might decay at different rates, so that each neuron would represent a different instant in time. Whatever. The main point is: we perceive objects in time in the same way we perceive objects in space. And what we are (mistakenly) calling 'working memory' just is that perceptual process.
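The two mechanisms mentioned in the paragraph above can be sketched as toy models in Python. Both are assumptions for illustration, not claims about actual neural circuitry. The first reads "looping through the input layer in sequence" as a shift register: each object has a row, and each new moment pushes older columns one slot along the time axis. The second gives each input unit its own decay rate, so the relative strengths of fast and slow units encode how long ago something appeared. All names and parameters (`TIME_SLOTS`, the decay rates, the objects) are hypothetical.

```python
import math

TIME_SLOTS = 4  # hypothetical width of the time axis


def shift_in(lattice: dict[str, list[int]], present: set[str]) -> None:
    """Mechanism 1 (assumed): the time axis as a shift register.

    Each object has a row; column 0 is 'now', and each new moment pushes
    older columns one slot further back, dropping the oldest.
    """
    for obj in present:
        lattice.setdefault(obj, [0] * TIME_SLOTS)
    for obj, row in lattice.items():
        row.insert(0, 1 if obj in present else 0)
        row.pop()  # anything older than TIME_SLOTS moments falls off


def decay_traces(elapsed: float, rates: list[float]) -> list[float]:
    """Mechanism 2 (assumed): units that decay at different rates.

    A single event leaves a trace exp(-rate * elapsed) in every unit; the
    pattern across fast and slow units encodes how long ago it happened.
    """
    return [math.exp(-rate * elapsed) for rate in rates]


lattice: dict[str, list[int]] = {}
for moment in [{"cup"}, {"cup", "pen"}, {"pen"}, set(), {"cup"}]:
    shift_in(lattice, moment)
print(lattice)  # {'cup': [1, 0, 0, 1], 'pen': [0, 0, 1, 1]}

recent = decay_traces(0.5, rates=[2.0, 0.5])  # fast unit, slow unit
older = decay_traces(2.0, rates=[2.0, 0.5])
print([round(x, 2) for x in recent])  # [0.37, 0.78]
print([round(x, 2) for x in older])   # [0.02, 0.37]
```

In the first model the cup's appearance at the first moment has already fallen off the lattice, so the time axis is limited in the same way the visual field is limited in space. In the second, no unit stores a timestamp, yet the ratio between fast and slow traces still distinguishes a recent event from an older one.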

It stands to reason that working memory would be limited in just the way that visual or audio perception are limited. We can only perceive certain intervals of time, and we can only perceive a limited quantity of objects in that time. Hence, working memory appears to be short term and limited in scope. There's no reason why it couldn't be extended in both dimensions; it is subject to plasticity just as is any other area. But this would be an exceptional case, rather than the norm.

If my speculations are indeed accurate, we should be able to predict that the limitations of working memory (as we continue to call it until someone comes up with a better name) would apply to linear processes that take place over time, and especially tasks involving remembering sequences, language or reasoning. And we could predict that cognitive load isn't really a measure of the number of objects we are presented, but the length of time it takes to present the objects.
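The second prediction can be stated as a toy calculation. This Python sketch assumes a fixed window of perceivable time (the 3-second `WINDOW` is an arbitrary, hypothetical value) and items shown one after another at a constant rate; it only shows the shape of the prediction and does not model any real data.

```python
# Toy model of the prediction: what limits "working memory" is the length
# of the time window, not the number of items. WINDOW is an assumption.
WINDOW = 3.0  # hypothetical span of perceivable time, in seconds


def retained(items: list[str], seconds_per_item: float,
             window: float = WINDOW) -> list[str]:
    """Return the items whose onset falls inside the final `window` seconds.

    Items are shown one after another; presentation ends at
    len(items) * seconds_per_item, and only items whose onset lies within
    `window` of that end are still 'perceived'.
    """
    end = len(items) * seconds_per_item
    return [item for i, item in enumerate(items)
            if end - i * seconds_per_item <= window]


fast = retained(list("ABCDEFGHI"), seconds_per_item=0.25)  # 9 quick items
slow = retained(list("VWXYZ"), seconds_per_item=1.0)       # 5 slow items
print(len(fast), len(slow))  # 9 3
print(slow)                  # ['X', 'Y', 'Z']
```

Under this toy model, nine quickly presented items all fit while five slowly presented ones overflow, which is the reverse of what a fixed limit on the number of items would predict.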

Anyhow, all this is, like I say, speculative. I haven't seen anything like this in the literature anywhere, but if there's anything to this, it will no doubt have been discovered by now, and I'm just unaware of it. There may be a whole discipline devoted to it. Maybe people who have read this far can find related literature, or let me know where my thinking is wrong.

Re: "What we see is what is happening in front of us now."

Well, technically what we see is a model of what is in front of us, and often this model can leave things out or add things we think are there. This seems especially true when we are distracted in some manner. See the gorilla effect, where the task of counting the number of times a ball is passed between players can cause the viewer to miss someone in a gorilla suit.

Good comment. It catches me in some loose language.

The sense I wanted to convey with that sentence is that what we perceive is in the present only, not the past or the future.

The reference to 'what is in front of us' was used as an expression only, and not to convey an endorsement of object realism, which is a separate issue and debate.